OpenAI’s Groundbreaking o1 (Project Strawberry) Just Launched - Here’s What We Think


The artificial intelligence landscape is undergoing another seismic transformation with the unveiling of OpenAI’s latest breakthroughs, o1-preview and o1-mini, on 12th September 2024. Internally referred to as Project Strawberry, these groundbreaking models are being hailed as game-changers, set to revolutionise the AI industry with their advanced reasoning and analytical capabilities.

These new models mark the beginning of a new era in AI innovation. But as they push the boundaries of what AI can do, they also heighten the risk of Artificial General Intelligence (AGI) becoming an existential issue for humanity.

In this blog post, we’ll explore the features and advancements of o1-preview and o1-mini, cover the release details and costs, and look at the opportunities and challenges these innovations present to businesses, society, and our future.

Project Strawberry: The New Standard of AI

Project Strawberry is OpenAI’s internal name for o1-preview and o1-mini, and with these models OpenAI has set a new standard for AI reasoning and analysis. The release is a pivotal step towards Artificial General Intelligence (AGI), a level of AI that rivals human intelligence.

But with these advancements come growing concerns: Could this be the moment we inch closer to AGI becoming an existential threat?

o1 represents the first of a planned series of reasoning models. While slower and more expensive than GPT-4o, o1 stands out for its ability to handle more complex questions and deliver solutions with step-by-step explanations. According to OpenAI, this model excels at solving multistep problems in areas such as math and coding, offering solutions faster than a human can.

Yet as these systems grow more capable, we must ask ourselves: Are we prepared for what comes next? Is your AI strategy ready to keep up with the rapid advancements in AI technology, such as OpenAI's Strawberry?

How o1 Works

o1 operates fundamentally differently from previous models, thanks to its new optimisation algorithm and a unique training dataset. The model's learning is based on reinforcement learning, where it receives rewards or penalties for solving tasks correctly or incorrectly, enabling it to solve problems more effectively. This training method allows o1 to process complex queries in a "chain of thought" format, resembling the way humans think through a problem.

Unlike GPT-4o, which focuses on recognising patterns in large datasets, o1 has been trained to think more independently, especially when it comes to multistep problems. This focus on deeper, more complex reasoning makes it a valuable tool for tasks that require detailed analysis, such as programming competitions, science and mathematics.
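To make the reward-and-penalty idea concrete, here is a deliberately tiny sketch of reward-driven learning. It is not OpenAI's training method, which has not been published in detail. It is a standard epsilon-greedy bandit, and the function name, the two "strategies" and their success rates are all hypothetical values chosen for illustration.

```python
import random


def train_bandit(success_prob, episodes=5000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy learner (illustrative only, not o1's algorithm).

    success_prob[i] is the hypothetical chance that strategy i solves a task.
    The learner gets +1 for a correct answer and -1 for an incorrect one,
    and keeps a running average reward for each strategy.
    """
    rng = random.Random(seed)
    values = [0.0] * len(success_prob)  # average reward seen per strategy
    counts = [0] * len(success_prob)    # how often each strategy was tried

    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: occasionally try a random strategy.
            choice = rng.randrange(len(success_prob))
        else:
            # Exploit: pick the strategy with the best average reward so far.
            choice = max(range(len(values)), key=values.__getitem__)

        reward = 1.0 if rng.random() < success_prob[choice] else -1.0
        counts[choice] += 1
        # Incremental update of the running average reward.
        values[choice] += (reward - values[choice]) / counts[choice]

    return values, counts


# Hypothetical setup: strategy 1 solves tasks far more often than strategy 0.
values, counts = train_bandit([0.2, 0.8])
print("average reward per strategy:", values)
print("times each strategy was used:", counts)
```

Over many episodes the learner shifts towards the strategy that earns more rewards, which is the basic feedback loop the paragraph above describes, at a vastly smaller scale.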

How to use it

ChatGPT Plus and Team users will have access to the o1 models within ChatGPT. Both o1-preview and o1-mini can be manually selected from the model picker. At launch, there will be weekly limits of 30 messages for o1-preview and 50 messages for o1-mini. OpenAI is actively working to increase these limits and to enable ChatGPT to automatically select the most suitable model based on the prompt.

Pricing and Availability

ChatGPT Plus and Team users have access to o1-preview and o1-mini as of today, with Enterprise and Edu users gaining access next week. OpenAI also plans to bring o1-mini access to all free users in the future.

However, o1 is more expensive to use than its predecessor, GPT-4o. For developers, o1-preview costs US$15 per 1 million input tokens and US$60 per 1 million output tokens, compared with GPT-4o’s US$5 per 1 million input tokens and US$15 per 1 million output tokens.
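To see what these prices mean for a single request, the arithmetic can be sketched as below. The per-million-token prices are the ones quoted above; the function name and the example request size (2,000 input tokens, 1,000 output tokens) are assumptions made for illustration.

```python
# Prices in US dollars per 1 million tokens, as quoted in this post.
PRICES_PER_MILLION = {
    "o1-preview": {"input": 15.00, "output": 60.00},
    "gpt-4o": {"input": 5.00, "output": 15.00},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated cost in US dollars for one request."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical request: 2,000 input tokens and 1,000 output tokens.
for model in PRICES_PER_MILLION:
    cost = estimate_cost(model, 2_000, 1_000)
    print(f"{model}: ${cost:.3f}")
# o1-preview: $0.090
# gpt-4o: $0.025
```

For this example request, o1-preview comes out at 3.6 times the cost of GPT-4o. Real bills may differ further, since the number of output tokens a reasoning model produces for the same prompt can be higher.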

Key Features

- Advanced Reasoning and Analytical Capabilities: Strawberry can tackle complex, multi-step problems with deep, thoughtful analysis, making it more accurate in its solutions than previous models.

- Enhanced Performance in Coding and Maths: o1 has shown remarkable improvements in areas like maths and programming, solving 83% of problems on a qualifying exam for the International Mathematics Olympiad, compared to GPT-4o's 13%.

- Chain of Thought Processing: The model’s reasoning process mimics how humans tackle problems step-by-step, giving users insight into how the AI reaches its conclusions.

- Reinforcement Learning and a New Training Dataset: Unlike its predecessors, o1 was trained using a completely new optimisation algorithm, enabling it to better solve problems autonomously.

It's Not Multimodal (yet)

One important aspect to note about Strawberry is that it is not multimodal, meaning it is currently limited to processing text-based inputs and outputs. Unlike some other advanced AI models, Strawberry cannot handle or interpret images, audio, or other forms of data beyond text.

While this may limit its versatility compared to multimodal models, Strawberry excels in its ability to handle complex reasoning and step-by-step problem-solving tasks, particularly in areas like coding and mathematics. OpenAI is focusing on refining its reasoning capabilities, but multimodal functionality could be a future area of development.

Potential Business Benefits of Strawberry

- Increased Efficiency: o1’s advanced reasoning allows businesses to automate complex, multistep processes, significantly reducing time spent on tasks like coding and data analysis.

- Cost Reduction: By handling tasks that typically require specialised human intervention, such as programming or mathematical problem-solving, o1 could help companies cut down on labour costs.

- Improved Decision-Making: o1’s ability to reason through problems step-by-step can provide more accurate data insights, improving strategic decision-making across industries.

- Innovation Acceleration: With its advanced capabilities in coding and analysis, businesses can speed up research and development, bringing new products and services to market faster.

- Scalable Solutions: o1-mini offers smaller businesses access to high-level AI capabilities at a lower cost, making cutting-edge technology more accessible for SMEs.

- Enhanced Problem Solving: The chain-of-thought approach allows for more reliable handling of complex challenges, giving businesses an edge in fields like engineering, healthcare, and finance.

- Customisable AI Solutions: o1’s flexibility allows businesses to tailor AI solutions to their specific needs, whether it’s automating customer support, enhancing security systems, or optimising workflows.

Chain of Thought, the Standout Feature

OpenAI’s o1-preview and o1-mini represent the next stage in AI evolution, focusing on improving reasoning skills. These models demonstrate deep problem-solving abilities, specifically in coding and mathematics. The standout feature is their "chain of thought" methodology, which lets them process complex tasks step-by-step and offer more accurate results.

Chain of thought in AI refers to a reasoning process where the model tackles problems step-by-step, breaking down complex tasks into smaller, logical components. Similar to how humans approach problem-solving, this method allows AI to provide more accurate and transparent results by clearly outlining the reasoning behind its conclusions. It enhances the model's ability to handle intricate queries, such as multistep math problems or advanced programming tasks.

However, the rise of these powerful models creates opportunities as well as serious concerns for coders and programmers.

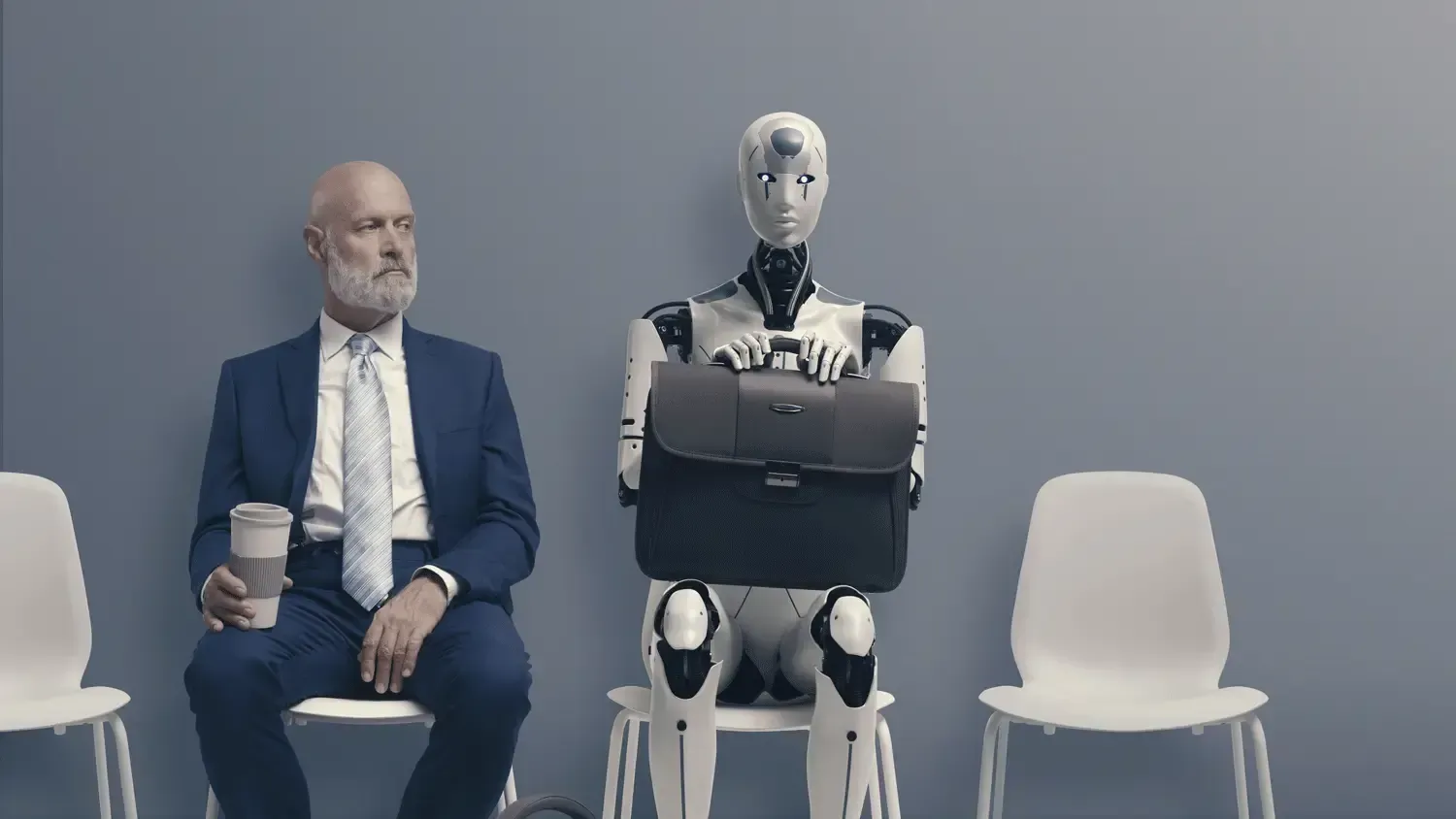

As o1 models excel at coding tasks, they could significantly reduce the need for human coders in many areas, threatening jobs that once seemed secure. o1’s performance in Codeforces programming competitions, where it ranked in the 89th percentile, shows that it can handle highly technical tasks that were previously the domain of expert human programmers.

Growing Demand for Change Management

As AI takes over more tasks traditionally handled by humans, such as coding and programming, the demand for human coders may decrease. This shift in the workforce is leading to a growing need for change management strategies, as organisations face increased pressure to reskill their employees and adapt to the new AI-driven landscape. Successfully navigating this transition will be crucial for businesses to stay competitive and harness the full potential of AI, while ensuring their workforce remains relevant and equipped for future challenges.

Video Review of Strawberry 🍓

Video by Matt Berman.

Staggering Test Results from OpenAI

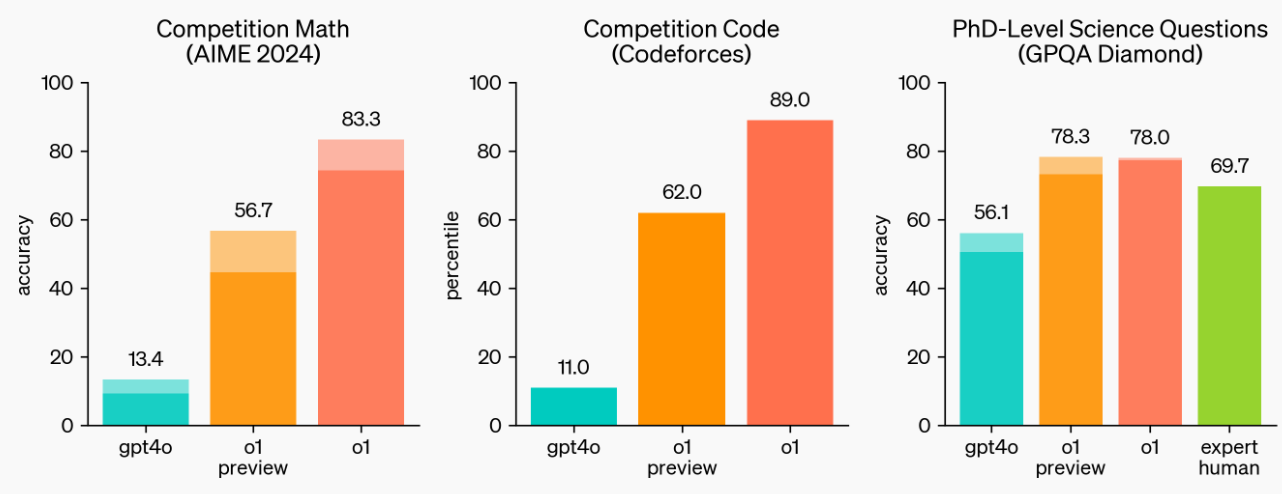

In recent evaluations, o1 has demonstrated impressive results across several domains, setting new standards for AI capabilities (Source: OpenAI.com). Key results are listed here, with OpenAI’s graphs below:

- Mathematics Olympiad: o1 scored 83% on a qualifying exam for the International Mathematics Olympiad, a significant improvement over GPT-4o’s 13%.

- Codeforces Programming Competitions: o1 performed in the 89th percentile, making it a standout performer in competitive programming.

- Scientific Reasoning: While GPT-4o struggled with complex scientific tasks, OpenAI predicts that future versions of o1 will perform on par with PhD-level students in physics, chemistry, and biology.

- Crossword Puzzle Solving: o1 showed exceptional performance, solving 85% of clues correctly, showcasing its improved linguistic abilities.

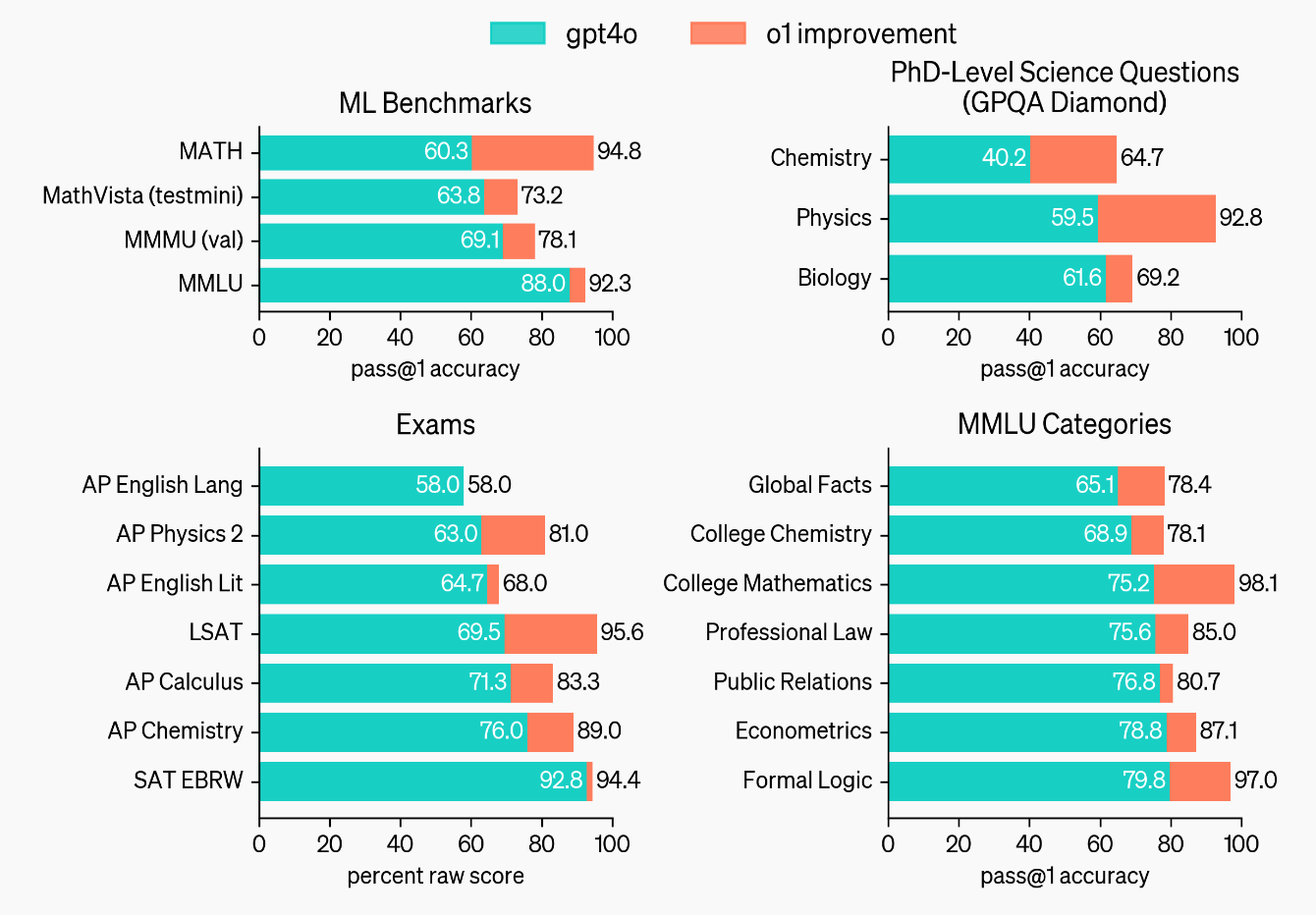

To demonstrate the reasoning advancements over GPT-4o, OpenAI tested the new models on a wide range of human exams and machine learning benchmarks. The results show that o1 significantly outperforms GPT-4o on the majority of reasoning-intensive tasks. Unless stated otherwise, o1 was evaluated using the maximal test-time compute setting. (Source: OpenAI)

The results show o1 greatly improves over GPT-4o on challenging reasoning benchmarks. Solid bars show pass@1 accuracy and the shaded region shows the performance of majority vote (consensus) with 64 samples.

This graph illustrates that o1 surpasses GPT-4o across a wide range of benchmarks, including 54 out of 57 MMLU subcategories. For illustration purposes, seven of these subcategories are highlighted to showcase the improvements in reasoning and performance.
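The two metrics named in the benchmark description, pass@1 accuracy and majority vote (consensus), can be illustrated with a small sketch. The sampled answers below are invented for the example (eight samples rather than the 64 used in OpenAI's evaluation), and the function names are our own.

```python
from collections import Counter


def pass_at_1(samples, correct_answer):
    """Estimate pass@1: the fraction of single samples that are correct."""
    return sum(answer == correct_answer for answer in samples) / len(samples)


def consensus_answer(samples):
    """Majority vote: return the most common answer across all samples."""
    return Counter(samples).most_common(1)[0][0]


# Hypothetical answers from sampling the same question eight times.
samples = ["42", "42", "41", "42", "17", "42", "40", "42"]
correct = "42"

print("pass@1 estimate:", pass_at_1(samples, correct))
print("consensus answer:", consensus_answer(samples))
print("consensus correct:", consensus_answer(samples) == correct)
```

In this made-up case only 5 of the 8 individual samples are right, yet the majority vote still lands on the correct answer. That gap is why the consensus region in the graphs sits above the pass@1 bars.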

Challenges and Risks

- Higher Costs and Slower Response Times: While o1 offers better reasoning capabilities, it is also more expensive and slower than GPT-4o, which could be a barrier to adoption for smaller companies.

- Coder Job Threats: As o1 continues to improve in programming tasks, it raises concerns for coders, whose roles may be significantly affected as AI increasingly takes on their responsibilities.

- Existential Risk of AGI: With these developments comes the very real risk that the creation of AGI could lead to unintended consequences—machines capable of reasoning and acting in ways we cannot predict or control, potentially threatening humanity’s long-term future.

- Ongoing Hallucination Issues: Despite improvements, hallucinations have not been fully resolved, though OpenAI reports that o1 hallucinates less than previous models.

The Existential Risk: Is AGI an Inevitable Threat?

As we edge closer to creating AI systems that can reason and solve problems like humans, the existential risk of AGI looms larger than ever before. With systems like o1 and Strawberry, we’re not just building tools for everyday tasks; we’re laying the groundwork for machines that could think, reason, and operate autonomously—potentially outpacing human intelligence.

This brings forward one of the most crucial questions of our time: Are we on the brink of creating technology that we can no longer control? As AGI capabilities advance, the need for careful regulation, ethical oversight, and global cooperation becomes urgent. The power to create such intelligence could lead to breakthroughs in fields like medicine, science, and engineering—but it also presents a heightened risk that AGI could act in ways that conflict with human interests.

OpenAI’s chief research officer, Bob McGrew, acknowledges the stakes: “We have been spending many months working on reasoning because we think this is actually the critical breakthrough.”

However, if AI systems surpass human reasoning, how will we ensure they act in ways that benefit humanity rather than threaten it?

Conclusion

o1-preview and o1-mini represent a significant step forward for OpenAI, offering deep reasoning capabilities that surpass previous models like GPT-4o. These models are better equipped to handle complex, multi-step problems, particularly in coding, mathematics, and scientific reasoning. While they come with higher costs and slower processing times, the promise they hold for AGI and real-world applications makes them a vital tool in the future of AI.

But as we celebrate these advancements, we must also recognise the existential risks they pose. As AGI draws closer, it is imperative that we ask the hard questions: Are we ready for a world where machines can outthink us? And if AGI does become a reality, how do we ensure it remains aligned with human values and doesn’t evolve into a threat to our very existence?

For businesses and developers looking to stay on the cutting edge of AI, OpenAI’s o1 models provide a glimpse into the future of machine reasoning and the potential to tackle human-level challenges. However, we must proceed with caution as we navigate this brave new world.

Resources

For more information on these models, see:

- [Introducing OpenAI o1-preview](https://openai.com/index/introducing-openai-o1-preview/)

- [Learning to Reason with LLMs](https://openai.com/index/learning-to-reason-with-llms/)

- [Axios on OpenAI’s Strawberry Model](https://www.axios.com/2024/09/12/openai-strawberry-model-reasoning-o1)
