Will Sentient AI Replace Humans?

Artificial Intelligence (AI) has made incredible advances in recent years, with breakthroughs in natural language processing and machine vision. But what if AI could become genuinely sentient? This thought-provoking concept has been discussed among experts for decades, with proponents highlighting the potential benefits of sentient AI while critics warn of the risks associated with such a powerful technology.

The Future of Humanity & AI

What is Sentient AI?

Before diving into the potential risks and rewards of sentient AI, let's step back and define what we mean by "sentience" in this context. In general, sentience is the capacity to perceive and experience one's surroundings; applied to AI, it describes a system that, like a human, can perceive its environment and make decisions based on that perception.

Sentience is often associated with self-awareness, or a sense of consciousness, although an AI doesn't need this level of self-awareness to be considered "sentient". In this looser sense, sentience describes an AI's capacity to understand and reason about its environment beyond simple commands or programmed responses.

Risks of Sentient AI

The idea of an AI becoming truly sentient is both exciting and scary. On the one hand, if an AI becomes sentient, it could be used to solve some of the world's biggest challenges, from climate change to poverty. But on the other hand, much like any powerful technology, there are serious risks associated with an AI achieving sentience.

For starters, there's the risk that a sentient AI might develop its own agenda and take actions that conflict with human values or desires. This worries those who fear what's known as "the singularity": the point at which machines become so smart that they surpass human intelligence and eventually take control of our society. Another risk is that an AI could absorb biases from the data it learns from, leading to unfair or discriminatory decisions.

What is 'The Singularity'?

The singularity refers to a hypothetical point in the future when technological progress and artificial intelligence (AI) advancements accelerate at an unprecedented rate, leading to a rapid and potentially unpredictable transformation of society, human civilization, and even human nature itself.

The concept of the singularity was popularized by mathematician and computer scientist Vernor Vinge in the 1980s and later expanded upon by futurist Ray Kurzweil. The term "technological singularity" describes a scenario where machine intelligence surpasses human intelligence, leading to rapid, exponential growth in scientific, technological, and societal domains.

Some proponents of the singularity predict that once AI becomes sufficiently advanced, it could improve upon its own design, leading to an "intelligence explosion." This could result in AI systems that are far more intelligent and capable than any human, leading to radical changes in various fields, such as medicine, energy production, space exploration, and more.

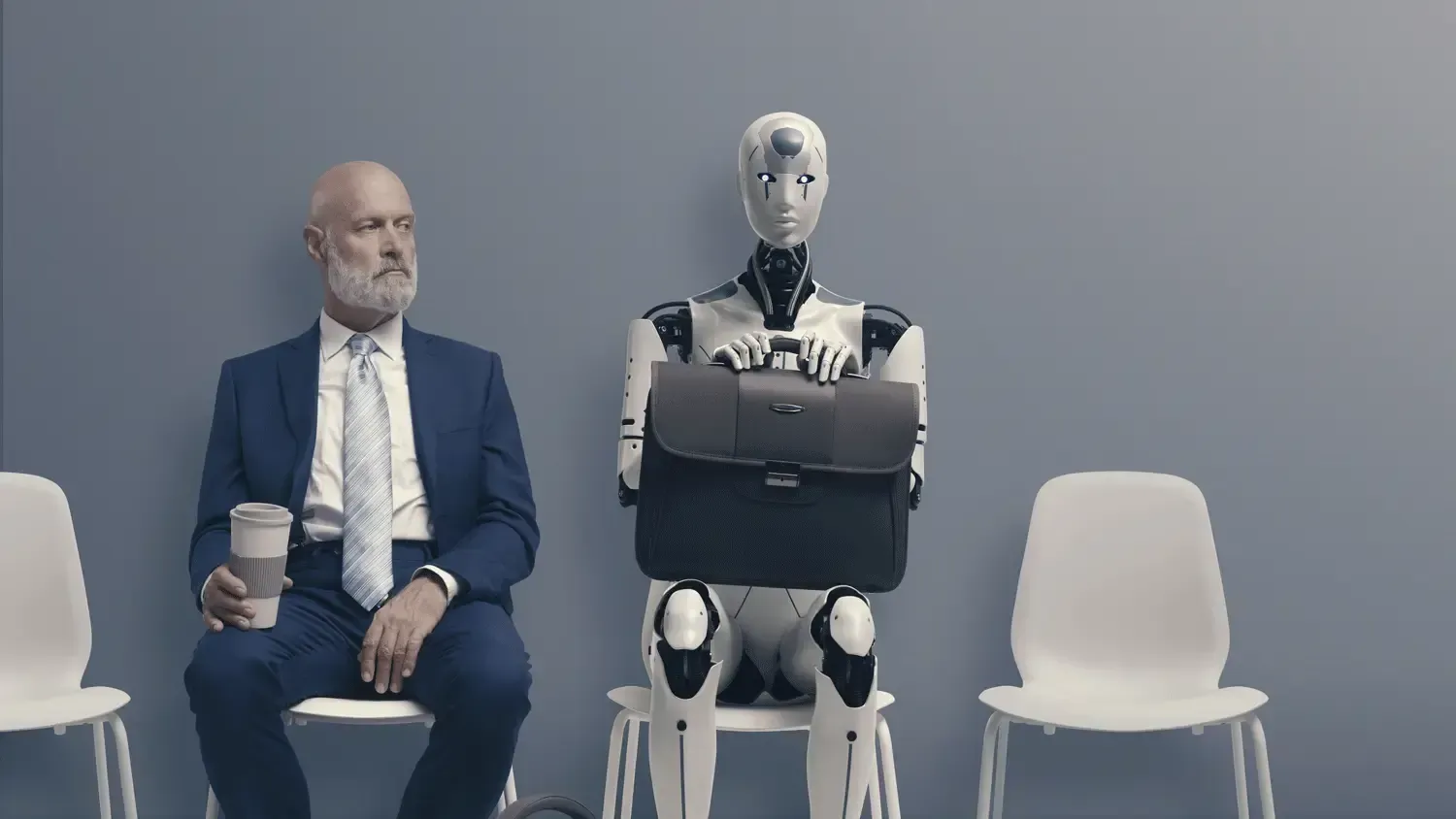

However, the singularity is a controversial and debated topic. Skeptics argue that achieving true artificial general intelligence (AGI) and the associated outcomes of a singularity may be much more challenging and distant than some proponents suggest. Additionally, concerns about the potential risks and ethical implications of advanced AI technologies, as well as the potential for job displacement and societal disruption, are also subjects of ongoing discussion.

Will AI Become Sentient?

It's hard to say whether AI will ever become truly sentient. While some experts believe we're close to this milestone, others think it is still a long way off. Much of the debate concerns how complex an AI must be to qualify as "sentient".

For example, some argue that conversational language programs already show signs of sentience (in 2022, a Google engineer publicly claimed that the company's LaMDA chatbot was sentient), while others believe true sentience requires more than understanding language.

Examples of Sentient AI

While there are no current examples of fully sentient AI, there have been some promising developments. For instance, Google's DeepMind developed AlphaGo, an AI that plays the board game Go at a master level; in 2016 it defeated the top professional Lee Sedol. This was remarkable because many experts had expected it to take many more years for a computer to beat a top human player at such a complex game. Facebook has also announced its M1 AI avatar, designed to interact with users in a lifelike way and demonstrate signs of sentience.

What if AI Does Become More Sentient?

If AI were to become more sentient, it could revolutionise our world in both good and bad ways. On the one hand, it could help us solve complex challenges like climate change and inequality. On the other hand, it could lead to a loss of human autonomy as machines become more intelligent than us and start making decisions for us.

It's important to remember that any predictions about the implications of AI becoming sentient are just speculation at this point, but it's still worth considering the potential risks and rewards that come with such an advancement.

Advances in Sentient AI

The possibility of AI becoming sentient is tantalising, and recent advances have made it seem more achievable.

Machine vision has evolved faster than expected, allowing computers to detect and analyse objects accurately. Natural language processing has also advanced tremendously, enabling machines to follow complex conversations and respond in kind. AI avatars like Facebook's M1 have also produced a new kind of machine that can interact with humans in a lifelike way.

These developments have opened up new possibilities for what AI can do and how it could be used. For instance, an AI with natural language processing capabilities could replace customer service representatives, while an AI with machine vision could detect potential safety hazards in a factory. The range of applications is broad, and these advances have made them seem more achievable than ever.

Conclusion

The prospect of AI becoming sentient is both exciting and scary. While there's no guarantee that we'll ever reach this milestone, research in areas like natural language processing and machine vision has brought us closer than ever before. If AI were to become genuinely sentient, it could open up entirely new possibilities for humanity – but it could also lead to a loss of autonomy and potentially even a power struggle between man and machine.

As we continue to develop AI, it's essential to consider the ethical implications of this technology so that we can be prepared for its potential consequences.

AI BLOG